There is a good article by Melissa Heikkilä at heise.de called “Wie man KI-generierte Texte erkennen kann / How to recognize AI-generated text”. It discusses the problem that we can no longer tell whether a text was written by an AI or by a human.

For me, this is not a problem at the moment.

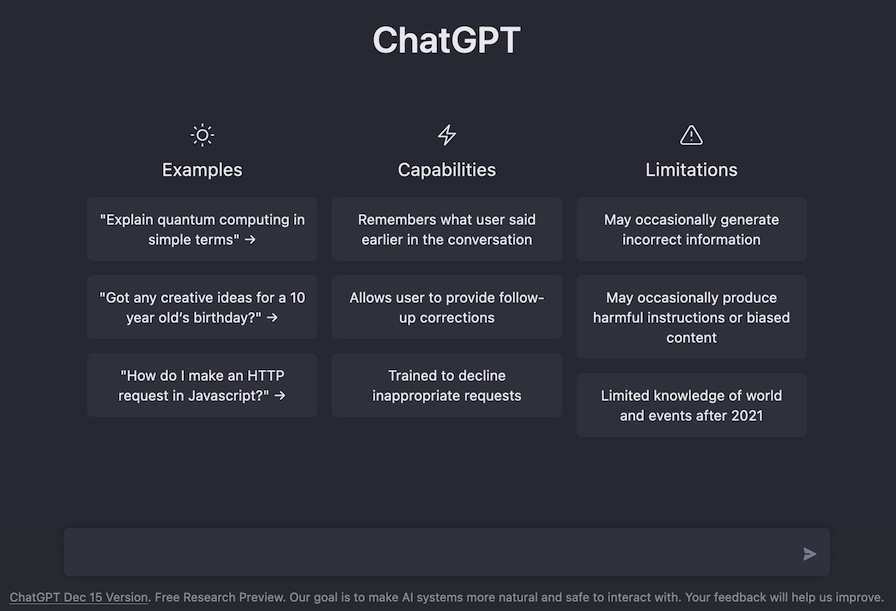

First of all, ChatGPT cannot know by itself whether a text is correct, because ChatGPT is not “intelligent”. The AI is “predicting the most likely next word in a sentence”. The article describes the result as an “illusion of correctness”.
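To make “predicting the most likely next word” a bit more tangible, here is a deliberately tiny sketch. It is not how ChatGPT works internally (ChatGPT uses a large neural network, not simple word counts), and the corpus and the function names `train_bigrams` and `predict_next` are made up for illustration. The toy only counts which word most often follows another one and returns that word.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, purely for illustration (an assumption, not real training data).
CORPUS = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    followers = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        followers[current][following] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    if word not in followers:
        return None
    return followers[word].most_common(1)[0][0]


model = train_bigrams(CORPUS)
print(predict_next(model, "the"))  # most frequent word after "the" in the corpus
print(predict_next(model, "sat"))  # most frequent word after "sat" in the corpus
```

Note that the toy never checks whether its output is true. It only returns what appeared most often in its data, which is roughly the point behind the “illusion of correctness”.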

Secondly, ChatGPT is still trained on a huge dataset and still supervised by humans. This, by the way, is why OpenAI asks you whether the answers you receive make sense when you use ChatGPT.

Third and most important, you never know whether a text is correct, regardless of whether it was written by a human or by a system. The difference between intelligent mammals and machines is the ability to evaluate data in a way a machine cannot yet. Humans have empathy, ethics, experience and a logical gut feeling. We can verify whether something is correct or not.

One could argue that a machine could do exactly the same if it had enough training data to build up experience. True. But as of today, no machine has the same broad and combined abilities as a human being. And we also know that it will still take years to build a machine with these abilities.

So the question now is whether we need mechanisms or rules, such as requiring authors to mark a text as “machine created and written”. From a comfort point of view, I’d say it would be good, and it would start a learning process for dealing with this new technology. But what about texts that humans intentionally write wrong, for example to spread false information? Are you always able to know whether something is right or wrong? I think not. The author argues that we need such rules because “in an already hugely politically polarized online world, such AI tools could further distort what we consume in terms of content”.

This leads to the main and most important advice: always check the facts against various sources. If you don’t, you will most probably step into a snake pit and end up with wrong facts or fiction. The author confirms my thought by writing “we need to learn to recognize signs of AI-written sentences ourselves.” And finally, we should not forget that the Turing test is still a very relevant tool for distinguishing between a human and an AI (see also the passage “Train and play help to recognize” in the article).

Screenshot taken at: https://chat.openai.com/chat