ML, AI - we are flooded

Hardly any topic is discussed as often on social media at the moment as Artificial Intelligence. I am extremely interested in it too, and of course I “play around” with the tools. Besides ChatGPT, the best-known tool for generating text, there is also, for example, DreamStudio, which generates images from a text description. For example, I “dreamed up” the image above using the following prompt in DreamStudio:

Granted, this isn’t bad, and playing around is fun. All other images in this post (except the AI graphic) are also from DreamStudio.

At the same time, I gave ChatGPT the following instruction to create a text for this article:

The result was this:

Artificial intelligence (AI) has undergone explosive development in recent years and has now also established itself in the field of corporate learning. Companies are increasingly recognizing the benefits of AI technologies to make employee training and development more effective and personalized.

A good example of this is the use of chatbots such as ChatGPT. ChatGPT can interactively guide employees through training and provide them with personalized recommendations and learning resources. This can not only save time and money, but also help improve learning and motivation.

It is important to note, however, that AI technologies also come with some dangers. One of the biggest challenges is that AI systems are only as good as the data they are trained on. If the data is incomplete or biased, this can cause AI systems to make discriminatory or incorrect decisions.

In addition, there is a risk that AI systems can interfere with the human workplace and lead to unemployment if they automate certain tasks. It is therefore important to use AI systems responsibly and ensure that they support humans and not the other way around.

In summary, AI in corporate learning has great potential to make training and development opportunities for employees more effective and personalized. However, to fully realize the benefits of AI, the dangers must be carefully considered.

DISCLAIMER: Text created with ChatGPT.

This is where it gets problematic, because I am a layman when it comes to corporate learning and have no idea whether the statements in this text are correct. I chose the corporate learning example quite deliberately, and I will come back to that later. But first I would like to explain the terms Machine Learning (ML), Artificial Intelligence (AI) and Deep Learning.

Update: Johannes Starke (see below) has, thankfully, taken a look at the text and has come to the following damning verdict:

The paragraph generated by ChatGPT is, as expected, completely banal. The “benefits” are so generic that they can be applied to almost any learning format/technology. And I doubt the statement that personalized learning resources can be PROVIDED.

Explanation of terms

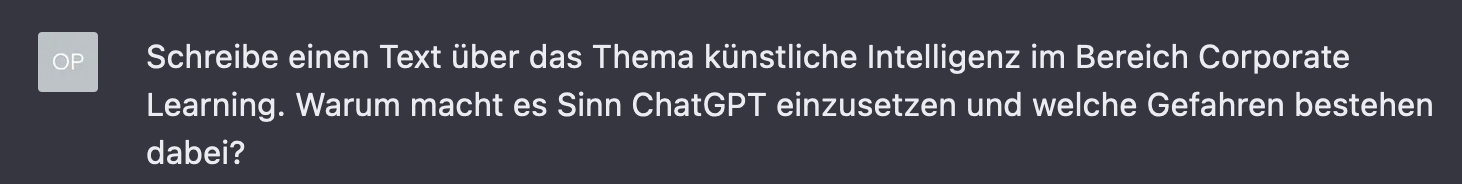

There is often confusion about what AI and ML actually are. In the following diagram I show the different areas and how they are related:

The graphic shows the following:

AI is the umbrella term for everything related to Artificial Intelligence. One of its largest subfields is Machine Learning, which in turn has two subfields: supervised and unsupervised learning. In the graphic, neural networks and deep learning sit within supervised learning.

In the following, the terms will be defined in more detail.

Definition AI

Artificial intelligence (AI) is the replication of human analytical and/or decision-making capabilities.

Artificial Intelligence (AI) refers to the development of computers and robots that are capable of behaving in ways that both mimic and exceed human capabilities. AI-powered programs can analyze and contextualize data to provide information or automatically trigger actions without human intervention.

Columbia Engineering, Artificial Intelligence (AI) vs. Machine Learning

Definition Machine Learning

Machine learning is the use of mathematical techniques (algorithms) to analyze data. The goal is to discover useful patterns (relationships or correlations) between different data elements. Once the relationships are identified, they can be used to make inferences about the behavior of new cases as they occur. In essence, this is analogous to the way humans learn.

Machine learning is a pathway to artificial intelligence. This subcategory of AI uses algorithms to automatically gain insights and recognize patterns from data, and applies this learning to make better and better decisions.

Columbia Engineering, Artificial Intelligence (AI) vs. Machine Learning
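
To make the idea of “finding a pattern in data and applying it to new cases” a bit more tangible, here is a minimal sketch in Python. The data (hours of training versus test score) is invented purely for illustration, and ordinary least squares is just one of many possible techniques; none of the sources quoted above prescribes it.

```python
# Hypothetical toy data, invented for illustration only.
hours = [1.0, 2.0, 3.0, 4.0]
scores = [3.0, 5.0, 7.0, 9.0]


def fit_line(xs, ys):
    """Fit y = slope * x + intercept with ordinary least squares."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# "Learning": discover the relationship between the two data elements.
slope, intercept = fit_line(hours, scores)


def predict(x):
    """Apply the discovered relationship to a new case."""
    return slope * x + intercept


print(predict(5.0))  # 11.0
```

The program is never told the rule behind the numbers; it derives slope and intercept from the data and then uses them for a case it has not seen before.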

Supervised and unsupervised learning

Definition supervised learning

Supervised learning, also known as supervised machine learning, is a subcategory of machine learning and artificial intelligence. It is characterized by using data sets that are labeled (marked) to train algorithms that can classify data or accurately predict outcomes.

What is supervised learning? IBM

Definition unsupervised learning

Unsupervised learning, also known as unsupervised machine learning, uses machine learning algorithms to analyze and group data sets that have not been assigned a label (tag). These algorithms discover hidden patterns or data groupings without the need for human intervention. The ability to detect similarities and differences in information makes it an ideal solution for exploratory data analysis, cross-selling strategies, customer segmentation and image recognition.

What is unsupervised learning? IBM

Differences

The difference between supervised and unsupervised learning lies in the data used for learning. In supervised learning, the algorithm or model is given “labeled” data to learn from: for every input, the correct output is also known. Over time, the machine learns from this feedback which of its outputs are right and which are wrong.
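
As a rough illustration of learning from labeled data, the following sketch trains a perceptron, one of the simplest supervised algorithms. The data points and their labels are made up for this example, and the function names are my own, not part of any library.

```python
# Labeled toy data (invented): each entry is (input features, correct output).
training_data = [
    ([1.0, 1.0], 0),
    ([2.0, 1.0], 0),
    ([4.0, 5.0], 1),
    ([5.0, 4.0], 1),
]


def predict(weights, bias, features):
    """Return 1 if the weighted sum is positive, otherwise 0."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train(labeled_data, epochs=10, learning_rate=1.0):
    """Perceptron rule: adjust the weights only when a prediction is wrong."""
    weights = [0.0] * len(labeled_data[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, label in labeled_data:
            error = label - predict(weights, bias, features)
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, features)]
                bias += learning_rate * error
        if mistakes == 0:  # every labeled example is classified correctly
            break
    return weights, bias


weights, bias = train(training_data)
print(predict(weights, bias, [6.0, 6.0]))  # 1
```

The known labels act as the "teacher": each wrong prediction nudges the weights, and training stops once all labeled examples are classified correctly.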

In unsupervised learning, only the input is known; there are no labels that give the correct output. The method is used for three tasks:

Clustering.

It clusters unlabeled data based on their similarities and differences.

Association Rules.

A rules-based method for finding relationships between data in a given data set.

Dimensionality reduction.

This method reduces the number of properties (dimensions) in a data set when there are too many of them. It is often used when pre-processing data for machine learning to increase efficiency.
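
Of the three tasks above, clustering is the easiest to show in a few lines. The sketch below uses k-means, a common clustering algorithm that I picked for illustration; the points are invented, and the naive initialization (simply taking the first k points as starting centers) is an assumption made to keep the example deterministic.

```python
import math

# Unlabeled toy data (invented): 2D points without any "correct answer".
points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (9.0, 9.5), (1.0, 0.5), (8.5, 9.0)]


def assign(data, centroids):
    """For each point, return the index of the nearest centroid."""
    return [
        min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
        for p in data
    ]


def update(data, assignment, centroids):
    """Move each centroid to the mean of the points assigned to it."""
    new_centroids = []
    for i, old in enumerate(centroids):
        members = [p for p, a in zip(data, assignment) if a == i]
        if members:
            new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
        else:
            new_centroids.append(old)  # keep an empty cluster's center in place
    return new_centroids


def k_means(data, k, max_iterations=100):
    centroids = list(data[:k])  # naive initialization (assumption)
    assignment = assign(data, centroids)
    for _ in range(max_iterations):
        centroids = update(data, assignment, centroids)
        new_assignment = assign(data, centroids)
        if new_assignment == assignment:  # nothing changed: converged
            break
        assignment = new_assignment
    return assignment, centroids


assignment, centroids = k_means(points, k=2)
print(assignment)  # [0, 1, 0, 1, 0, 1]
```

Nobody told the program which points belong together; the two groups emerge only from the distances between the points.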

Definition of neural networks

Neural networks, also known as artificial neural networks (ANN) or simulated neural networks (SNN), are a subfield of machine learning and are at the heart of deep learning algorithms. Their name and structure are inspired by the human brain and mimic the way biological neurons send signals to each other.

An artificial neural network is a computer program in which certain presumed organizational principles of a real neural network (such as the human brain) serve as inspiration.

Jerry Kaplan, Artificial Intelligence - An Introduction, p.45, mitp, 2017
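
What such an artificial neuron does can be sketched in a few lines: it weights its incoming signals, adds them up and passes the sum through an activation function. The weights, the bias and the choice of the sigmoid function below are illustrative assumptions, not values taken from the sources above.

```python
import math


def sigmoid(z):
    """Squash any number into the range between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Weighted sum of the incoming signals, followed by the activation."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(weighted_sum)


# Illustrative values only: three incoming signals and their weights.
signals = [1.0, 0.0, 1.0]
weights = [0.5, -0.3, 0.8]
bias = -1.0

activation = neuron(signals, weights, bias)
print(round(activation, 3))  # 0.574
```

A network is nothing more than many of these units wired together, with the output of one neuron serving as an input signal for others.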

Definition Deep Learning

Deep learning is a subfield of machine learning that is essentially a neural network with three or more layers. These neural networks attempt to simulate the behavior of the human brain (even though they are far from matching its capabilities) and make it possible to “learn” from large amounts of data. While a neural network with a single layer can still make approximate predictions, additional hidden layers can help optimize and refine accuracy.
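
Why an extra hidden layer matters can be shown with a classic toy case: the XOR function, which a single layer of neurons cannot represent but a network with one hidden layer can. In the sketch below the weights are set by hand instead of being learned, and the network is tiny compared with anything that would be called deep learning in practice, so please read it as an illustration of layering only.

```python
def step(z):
    """Simplest possible activation: fire (1) or stay silent (0)."""
    return 1 if z > 0 else 0


def layer(inputs, weight_rows, biases):
    """Compute the outputs of all neurons in one layer."""
    return [
        step(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weight_rows, biases)
    ]


# Hand-picked weights (assumption, not learned from data).
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]  # neuron 1 acts like OR, neuron 2 like AND
HIDDEN_BIASES = [-0.5, -1.5]
OUTPUT_WEIGHTS = [[1.0, -1.0]]             # "OR but not AND"
OUTPUT_BIASES = [-0.5]


def xor_network(x1, x2):
    """Input layer -> hidden layer -> output layer."""
    hidden = layer([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES)
    output = layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)
    return output[0]


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_network(a, b))
```

The hidden layer first extracts two intermediate features (OR and AND), and the output neuron combines them. In real deep learning the same principle applies, except that the weights are learned from large amounts of data and there are many more layers and neurons.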

Where do we stand?

The above explanations of terms are quite academic and unemotional. That is important, and I wrote them that way deliberately. After all, what is Artificial Intelligence not? AI has nothing to do with intelligence in the sense of human intelligence. Of course, the way our brain works serves as a blueprint or template for creating “intelligent algorithms”. In supervised and unsupervised learning, people even talk about the ability to learn and say that the principles are similar to human learning. Nevertheless, the machine still lacks essential characteristics of a human.

One is intuition or gut feeling. We are able to sense whether something can be true or not. We are able to think through complex relationships and then come to a conclusion whether something is right or wrong. A machine or a program that uses a machine learning algorithm simply cannot do that (today).

The first appearance of the term “artificial intelligence” in the literature is attributed to John McCarthy, who said in 1955 that the goal was to “develop machines that behave as if they had human intelligence” (Kaplan). In addition, the concept of “artificial neurons” was discussed in the 1950s. Then, in the mid-1980s, it was proposed to link multiple artificial neurons to solve complex problems (Finlay). This eventually gave rise to the concepts of neural networks and deep learning.

I think these explanations make it clear that we cannot speak of intelligence in the sense of human intelligence, only of a simulation of it. So for the time being, we don’t need to be afraid that the machines will subjugate us.

What does this mean for the use of artificial intelligence?

Media produced by an AI tool.

I recently listened to an excellent episode of the podcast Lernlust. The title, The AI and Us…, was promising and the content did not disappoint. Susanne Dube, Johannes Starke and Axel Lindhorst talked about what AI, and in particular ChatGPT, is doing to us. Three perspectives emerge from the first part of the podcast:

- ChatGPT and other tools are exciting and we need to and should engage with them. However, we still know very little.

- We need to explore the tools and how to use them appropriately.

- It must be apparent whether a text, image, audio file, or other digital media was created with an AI tool.

I agree with all three points without exception. The last point in particular is necessary from my point of view: such a label serves as an indicator and as a call to examine the media very closely. Johannes Starke goes so far on this point that he does not even want to read text written by a tool like ChatGPT.

However, what we should also not do is dismiss these tools and options completely. As I wrote above, “Artificial Intelligence” has been a topic in the field of Computer Science since the 1950s. The desire to let a machine do things on its own, using the complex structures of our brain as a template, is exciting and can help us enormously in a wide variety of areas. I am thinking of medical research (e.g., cancer research), geological and climate models, astronomy, and many others. We use the machine to get better results, or to make results and analyses possible in the first place.

On a very personal level, I have already used ChatGPT as an idea generator myself, e.g. to “brainstorm” names or slogans for my consulting company (the proximity to Deep Learning … ;-) ), or to have code snippets created.

In the end, we need to use the new technologies with the utmost care. Care here always means not accepting information or output from an AI tool unchecked.

Are the machines taking over?

No! As long as we establish appropriate checking mechanisms, they won’t. OpenAI, the creator of ChatGPT and other AI tools, announced at the end of January 2023 that it is working on such checking mechanisms and will make appropriate tools available. Another article on this topic is A watermark for chatbots can expose text written by an AI.

In addition, I would like to make it clear once again that there is not yet any machine or program whose intelligence is anything like human intelligence. The goal of producing a true artificial intelligence will come closer as quantum computing technologies become established, but even then it remains in the distant future, if it is achievable at all.

And let’s not forget that Isaac Asimov wrote down the Laws of Robotics. As long as we stick to them, nothing can happen to us ;-):

- Zeroth law: a robot must not harm humanity or allow humanity to come to harm through inaction.

- First law: a robot may not injure a human being or allow a human being to come to harm through inaction, unless this would violate the zeroth law.

- Second law: a robot must obey the orders of humans, unless such orders are in conflict with the zeroth or first law.

- Third law: a robot must protect its own existence as long as its actions do not contradict the zeroth, first or second law.

Further reading

There are now a great many articles, posts and contributions on the web. I will spare myself a list of resources here that have probably already been linked elsewhere. However, I would like to point out a small number of what I consider to be good resources.

ChatGPT is fun, but not an author - Science.org (EN). Good and concise article on what ChatGPT is NOT.

Neural Networks and Deep Learning - online book (EN). A scientific textbook to understand the basic principles of neural networks and deep learning.

AI For Everyone by Andrew Ng. A very good, free online course that introduces AI, taught by one of the best-known researchers in the field.